What Is Edge AI and What Are We Talking About?

Edge AI is a way to process and analyse the environment that involves running deep learning algorithms at the edge of the network. This is made possible by cheap devices that are built specifically for the job at hand.

From my research, I managed to deepen my knowledge and find valuable information related to the topic that could be useful to anyone interested in this area.

A More In-Depth Talk About Edge AI

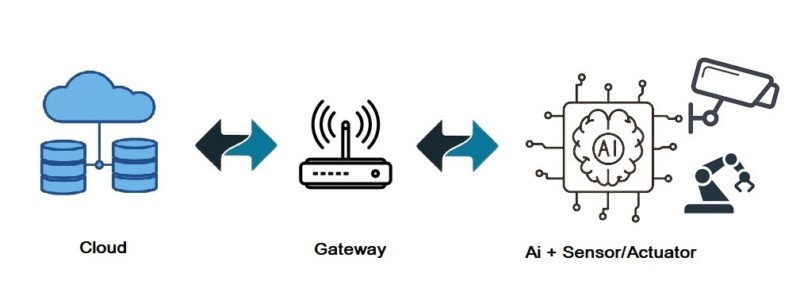

Advances in technology allowed devices to be placed at the edge of the network to collect data from different sensors and thereby determine whether or not to activate actuators. This was the age of the Internet of Things (IoT). A further breakthrough came with devices that can analyze the environment in real time using artificial intelligence, known as the Artificial Intelligence of Things (AIoT).

With edge AI devices, we can now process and store information close to where it is measured, because these devices contain processors adapted to the complex calculations of deep learning algorithms. All of this looks good, but there are still some drawbacks. These devices don’t have the computing capability of large cloud data centers, so the cloud is still needed in the early stages of the deep learning process, such as training the convolutional neural network.

Now we come to the good stuff: the benefits of using this type of technology.

- Reliable connectivity: After the training, the data gathering and processing devices do not need to communicate with the cloud; all is done in situ.

- Reduced latency and better bandwidth efficiency: Communication is faster because the data is processed on the device instead of travelling to the cloud and back.

- Increased security and privacy: There are fewer security vulnerabilities when the data is being processed at the edge of the network.
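To put the bandwidth benefit listed above in perspective, here is a back-of-the-envelope sketch. Every number in it is an assumption chosen for illustration (an uncompressed 640x480 RGB camera at 30 frames per second, and a hypothetical 32-byte detection message per frame). Real systems compress video, so the actual gap will be smaller.

```python
# Illustrative arithmetic only: every number below is an assumption,
# not a measurement from a real device.

FRAME_WIDTH = 640        # pixels (assumed camera resolution)
FRAME_HEIGHT = 480       # pixels
BYTES_PER_PIXEL = 3      # uncompressed RGB
FRAMES_PER_SECOND = 30   # assumed frame rate
RESULT_BYTES = 32        # hypothetical size of one detection message


def raw_stream_bytes_per_second() -> int:
    """Bytes per second needed to ship every raw frame to the cloud."""
    return FRAME_WIDTH * FRAME_HEIGHT * BYTES_PER_PIXEL * FRAMES_PER_SECOND


def edge_result_bytes_per_second() -> int:
    """Bytes per second if the device only sends one small result per frame."""
    return RESULT_BYTES * FRAMES_PER_SECOND


cloud = raw_stream_bytes_per_second()
edge = edge_result_bytes_per_second()
print(f"raw video to the cloud: {cloud / 1e6:.1f} MB/s")
print(f"results from the edge:  {edge / 1e3:.2f} kB/s")
print(f"reduction factor:       {cloud // edge}x")
```

Under these assumptions, the device that analyses frames locally sends only a tiny fraction of the data that a camera streaming raw frames would.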

The applications for Edge AI devices are endless. We simply have to use our imagination to find a problem that needs solving. There are already interesting real-world applications that can help fellow humans. Because the devices are becoming so cheap, I think they will be everywhere in the future, with uses ranging from autonomous driving and real-time gaming in industry to diagnostics in healthcare. I even found an interesting project related to smart agriculture, in which a system uses ultrasonic sounds to repel animals in order to protect crops. Drones are already being used in advanced irrigation systems, plant disease recognition, and pest identification.

How Does Edge AI Work?

I will leave the math aside because I think the process behind even a “simple” object classification is too much, even for someone with a background in calculus, probability, linear algebra, etc.

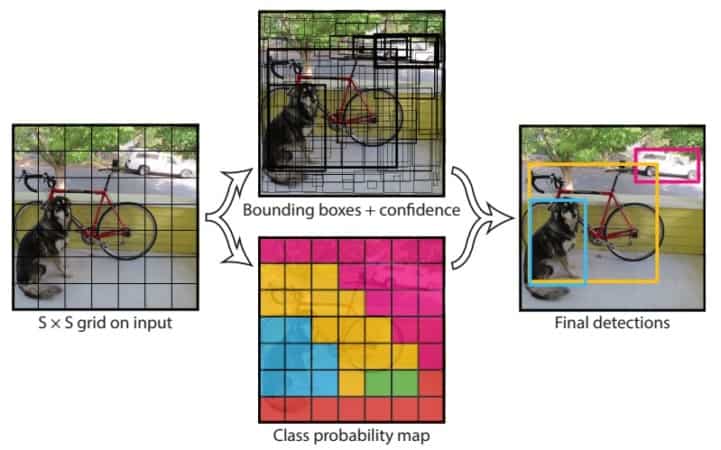

In the case of object detection, I think the simplest way to explain it is this: when we take a shot with a camera, the image is divided into small squares. For each square, the convolutional neural network does its calculations and outputs the probability of the object (an animal, a plant, etc.) being in that area of the image. If the probability is high in a region, it gives the algorithm confidence that the object is in that area of the picture. Then the algorithm draws a box, labeled with a probability value, around the object.
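The idea of squares and probabilities can be sketched in a few lines of Python. This is a deliberately simplified illustration, not how a real detector is implemented: the grid of probabilities is made up and stands in for the output of a convolutional neural network, and the function name `box_from_grid` is hypothetical. Real detectors such as YOLO have each square predict box coordinates directly, while this sketch only mirrors the simplified explanation above.

```python
# Simplified sketch: the grid of probabilities is made up and stands in
# for the per-cell output of a real convolutional neural network.

from typing import List, Optional, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def box_from_grid(
    cell_probs: List[List[float]],
    cell_size: int,
    threshold: float = 0.5,
) -> Optional[Tuple[Box, float]]:
    """Return a box around all cells above the threshold, plus a confidence.

    The confidence is simply the highest cell probability inside the box.
    Returns None when no cell is confident enough.
    """
    hits = [
        (row, col, prob)
        for row, line in enumerate(cell_probs)
        for col, prob in enumerate(line)
        if prob >= threshold
    ]
    if not hits:
        return None

    rows = [row for row, _, _ in hits]
    cols = [col for _, col, _ in hits]
    box = (
        min(cols) * cell_size,
        min(rows) * cell_size,
        (max(cols) + 1) * cell_size,
        (max(rows) + 1) * cell_size,
    )
    confidence = max(prob for _, _, prob in hits)
    return box, confidence


# Hypothetical 4x4 grid for a 128x128 image (each cell is 32x32 pixels).
dog_probs = [
    [0.02, 0.05, 0.03, 0.01],
    [0.04, 0.81, 0.92, 0.06],
    [0.03, 0.77, 0.88, 0.05],
    [0.01, 0.04, 0.02, 0.01],
]

print(box_from_grid(dog_probs, cell_size=32))
```

Here the four central squares exceed the threshold, so the box covers the middle of the image and carries the highest probability found among them.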

All of this requires a lot of math, and in the case of small devices, such as those used in edge AI applications, not all of those calculations can be performed by the board’s processor(s). In a way, we can say that there are three phases:

- Training: If we want to identify a dog in a photograph, first we have to take hundreds of photos with variations in order for the convolutional neural network to be properly trained. This process is normally carried out using the full power of computers in cloud data centers.

- Test: We test the convolutional neural network to see if it can identify a dog when we show it a picture. Small adjustments can be made to the convolutional neural network.

- Deployment: In this final stage, the model is transferred to the development board, and we can run it to perform object identification in real time and use peripherals like a microcontroller to give orders to an actuator if we want.
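The three phases above can be sketched with a toy example. To keep it short and runnable, a tiny perceptron stands in for the convolutional neural network, two made-up numeric features stand in for a photo, and a JSON string stands in for the model file copied to the board. All function names, data values, and the `ACTUATOR_ON` / `ACTUATOR_OFF` commands are hypothetical.

```python
# Toy sketch of the three phases. A tiny perceptron stands in for the
# convolutional neural network, and two made-up numeric features stand in
# for a photo. All names and data here are hypothetical.

import json
from typing import List, Tuple

Sample = Tuple[List[float], int]  # (features, label) with label 1 = "dog"


def predict(weights: List[float], bias: float, features: List[float]) -> int:
    """Return 1 ("dog") if the weighted sum is positive, else 0."""
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0


def train(samples: List[Sample], epochs: int = 20, lr: float = 0.1):
    """Phase 1 (training): would normally run on powerful cloud hardware."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for features, label in samples:
            error = label - predict(weights, bias, features)
            weights = [w + lr * error * x for w, x in zip(weights, features)]
            bias += lr * error
    return weights, bias


def accuracy(weights: List[float], bias: float, samples: List[Sample]) -> float:
    """Phase 2 (test): check the model on examples it has not seen."""
    correct = sum(predict(weights, bias, f) == y for f, y in samples)
    return correct / len(samples)


def actuator_command(model_json: str, features: List[float]) -> str:
    """Phase 3 (deployment): the device loads the model and acts on it."""
    model = json.loads(model_json)
    is_dog = predict(model["weights"], model["bias"], features)
    return "ACTUATOR_ON" if is_dog else "ACTUATOR_OFF"


training_set = [([1.0, 0.9], 1), ([0.9, 1.0], 1), ([0.1, 0.2], 0), ([0.2, 0.1], 0)]
test_set = [([0.9, 0.9], 1), ([0.2, 0.2], 0)]

weights, bias = train(training_set)
print("test accuracy:", accuracy(weights, bias, test_set))

exported = json.dumps({"weights": weights, "bias": bias})  # sent to the board
print(actuator_command(exported, [0.95, 0.85]))
```

The point of the sketch is the split of work: the expensive loop lives in `train`, while the code that runs on the board only loads the stored numbers and does one cheap calculation per input.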

One widely used deep learning approach to object detection is called “You Only Look Once” (YOLO). Running a real-time object detection algorithm demands a lot of computing power, so projects normally rely on tools like OpenCV, TensorFlow, YOLO, and Tiny-YOLO. The last one is a lightweight version of YOLO that works well on boards like the Nvidia Jetson Nano and Maixduino.
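One detail this article does not go into: detectors of this kind typically produce several overlapping candidate boxes for the same object, and a common clean-up step is non-maximum suppression (NMS), which keeps only the most confident box in each overlapping group. The sketch below shows the idea in plain Python. The boxes, scores, and the 0.5 overlap threshold are invented for illustration, and real frameworks ship their own optimized versions of this step.

```python
# Sketch of non-maximum suppression (NMS) in plain Python. The boxes and
# scores below are invented for illustration.

from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (left, top, right, bottom)
Detection = Tuple[Box, float]            # (box, confidence)


def iou(a: Box, b: Box) -> float:
    """Intersection over union: 0.0 for no overlap, 1.0 for identical boxes."""
    overlap_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    overlap_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = overlap_w * overlap_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def non_max_suppression(
    detections: List[Detection], iou_threshold: float = 0.5
) -> List[Detection]:
    """Keep the most confident box in each group of heavily overlapping boxes."""
    kept: List[Detection] = []
    # Visit boxes from most to least confident.
    for box, score in sorted(detections, key=lambda d: d[1], reverse=True):
        # Keep a box only if it does not overlap much with any kept box.
        if all(iou(box, kept_box) < iou_threshold for kept_box, _ in kept):
            kept.append((box, score))
    return kept


candidates = [
    ((30, 30, 100, 100), 0.92),    # best box around the dog
    ((34, 28, 104, 98), 0.85),     # near-duplicate of the same dog
    ((200, 50, 260, 120), 0.70),   # a second, separate object
]
for box, score in non_max_suppression(candidates):
    print(box, score)
```

In this made-up example the near-duplicate box is dropped because it overlaps heavily with a more confident one, while the separate object is kept.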

Hardware Architectures for Edge AI

The hardware of the development boards for Edge AI can vary in technology. At the moment, manufacturers use CPU/GPU, FPGA, and ASIC-based technology as artificial intelligence accelerators. Each of them has pros and cons, which I will take the liberty of explaining.

- CPU/GPU: These architectures have limited flexibility, and the focus should be on software optimization. Also, they are power hungry.

- FPGA: Having high flexibility, these accelerators are suited to run applications involving deep learning.

- ASIC: These purpose-built A.I. accelerators are energy efficient and have better performance.

Some Development Boards for Edge AI

On the market, there are development boards with AI accelerators that can perform operations such as object detection and classification and much more. Some of the boards can also do voice recognition with their on-board microphone and have a port for connecting an external speaker, for example to play music.

The Maixduino is a low-cost board that has a chip dedicated to artificial intelligence operations and an ESP32 microcontroller, which is a bonus. The most famous Nvidia development board is the Jetson Nano, with a GPU architecture. The Intel Neural Compute Stick can be used in conjunction with a Raspberry Pi or any other small PC. Google sells the Coral development board and, paraphrasing the marketing team, “Coral is a complete toolkit to build products with local AI. Our on-device inferencing capabilities allow you to build products that are efficient, private, fast, and offline.”

In the case of image manipulation, we can do object identification, pose estimation, image segmentation, and much more. In audio-based projects, we can do key phrase detection.

References

- Enabling Design Methodologies and Future Trends for Edge AI: Specialization and Co-design

- Green IoT and Edge AI as Key Technological Enablers for a Sustainable Digital Transition towards a Smart Circular Economy: An Industry 5.0 Use Case

- Design, Development and Evaluation of an Intelligent Animal Repelling System for Crop Protection Based on Embedded Edge-AI

- Embedded Development Boards for Edge-AI: A Comprehensive Report

- Maixduino

- You Only Look Once: Unified, Real-Time Object Detection

- Coral.ai

- Nvidia developer page